Apple unveiled new Apple Intelligence capabilities at WWDC 2025, including Live Translation, Workout Buddy for Apple Watch, ChatGPT support in visual intelligence, and updates to Genmoji and Image Playground. Third-party developers can also access the on-device model free of charge through the Foundation Models framework. These features are planned for release later this year.

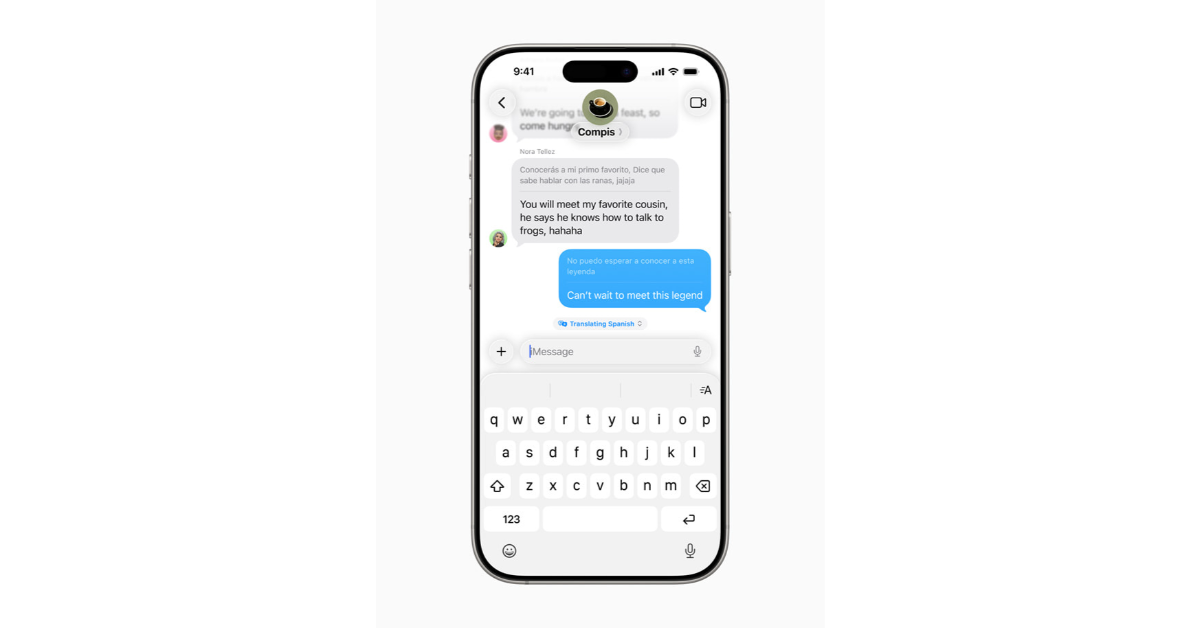

Live Translation

Live Translation helps break down language barriers by letting users communicate across languages when messaging or speaking. It is integrated into Messages, FaceTime, and Phone, and is powered by Apple-built models that run entirely on device. In Messages, Live Translation translates messages automatically, so users can, for example, make plans with new acquaintances while travelling overseas. On FaceTime calls, users can follow along with translated live captions while still hearing the speaker’s voice. On phone calls, the translation is spoken aloud during the conversation.

Genmoji and Image Playground

Genmoji and Image Playground have been updated to give users more ways to express themselves. In addition to turning a text description into a Genmoji, users can now mix emoji together and combine them with descriptions to create something new. When creating images inspired by friends and family, they can also adjust personal attributes, such as hairstyle, to match a friend’s current look.

In Image Playground, users can explore new styles powered by ChatGPT, such as oil painting or vector art, or tap “Any Style” and describe what they want in order to generate an image for a specific idea. The user’s description or photo is sent to ChatGPT only with their permission, so they remain in control and nothing is shared without their consent.

ChatGPT integration in Visual Intelligence

Visual intelligence now extends to the content on a user’s iPhone screen, so they can search and take action on whatever they are viewing. Built on Apple Intelligence, the feature lets users learn about objects and places with their camera, ask ChatGPT questions about what they are looking at, search Google, Etsy, and other apps for similar images and products, and highlight a specific object of interest to search for it online.

Visual intelligence also recognises when a user is looking at an event and suggests adding it to their calendar, extracting the date, time, and location to prepopulate the entry. To use visual intelligence on screen content, users press the same buttons they use to take a screenshot. They can then choose to save or share the screenshot, or explore more with visual intelligence.

Workout Buddy in Apple Watch

Apple Intelligence also comes to fitness with Workout Buddy, a new workout experience for Apple Watch. It offers personalised motivational insights based on the user’s workout data and fitness history, drawing on metrics such as heart rate, pace, distance, Activity rings, and personal fitness goals. A text-to-speech model then turns these insights into a dynamic generative voice built from Fitness+ coaches’ voice data. The data is processed privately and securely with Apple Intelligence.

Workout Buddy will be available in English on Apple Watch with Bluetooth headphones. It will support a range of workout types, including outdoor and indoor runs, outdoor and indoor walks, outdoor cycling, HIIT, and functional and traditional strength training.

Apple Intelligence On-Device Model

Apple is opening up the on-device foundation model at the core of Apple Intelligence to developers. With the Foundation Models framework, app developers can build intelligent experiences that work offline, using AI inference that is free of cost. For example, an education app could generate a personalised quiz, and an outdoors app could add natural language search.

The framework has native support for Swift, so developers can access the model with as few as three lines of code. Guided generation, tool calling, and other features are built in, making it easier to add generative capabilities to existing apps. Because the model runs on device, the approach is intended to protect users’ privacy while improving their experience.
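
As a rough illustration of what those few lines might look like, here is a minimal sketch that assumes the `FoundationModels` module, `LanguageModelSession`, and `SystemLanguageModel` as shown in Apple’s developer materials. The function name and prompt are hypothetical, chosen to mirror the quiz example above, and the code needs an Apple Intelligence-capable device running the new OS releases.

```swift
import FoundationModels

// Hypothetical helper: asks the on-device model for one quiz question.
// Returns nil when the model is unavailable (for example, on an
// unsupported device or when Apple Intelligence is switched off).
func makeQuizQuestion(about topic: String) async throws -> String? {
    // Check that the system model can be used before creating a session.
    guard case .available = SystemLanguageModel.default.availability else {
        return nil
    }

    // The core of the call: create a session, send a prompt, read the reply.
    let session = LanguageModelSession()
    let response = try await session.respond(
        to: "Write one short quiz question about \(topic)."
    )
    return response.content
}
```

An app could call `try await makeQuizQuestion(about: "photosynthesis")` from an async context and fall back to a pre-written question when the result is `nil`.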

AI capabilities in Shortcuts

Shortcuts become more powerful with Apple Intelligence, letting users tap into intelligent actions for features such as text summarisation and image generation. Users can now call Apple Intelligence models directly, either on device or with Private Cloud Compute, to generate responses that feed into the rest of a shortcut while keeping their information private. For example, a student could build a shortcut that compares an audio transcription of a class lecture with their notes and adds any key points they missed. Users can also choose ChatGPT to provide responses that feed into a shortcut.